Rana Muhammad Shahroz Khan

I am a first-year PhD student at UNC Chapel Hill, where I work on Efficient ML and NLP. I am grateful to be advised by Dr. Tianlong Chen. Before that, I completed my Bachelor's in Computer Science and Mathematics at Vanderbilt University, where I was advised by Dr. Soheil Kolouri and worked at the intersection of Computer Vision and Optimal Transport. During the summer of 2023, I was a Research Intern at Lawrence Livermore National Laboratory in the Machine Intelligence Group, working under the guidance of Dr. Jay Thiagarajan, Dr. Shusen Liu, and Dr. Rushil Anirudh on Bayesian Optimization for Uncertainty Quantification. In the summer of 2024, I also had the pleasure of working as an ML Research Intern at HighArc under the supervision of Dr. Ardavan Bidgoli and Dr. Manuel Ladron de Guevara.

Email / GitHub / Google Scholar / LinkedIn / CV [01/2025]


Updates


Research

I'm interested in NLP and Generative AI, with a focus on making them more efficient.


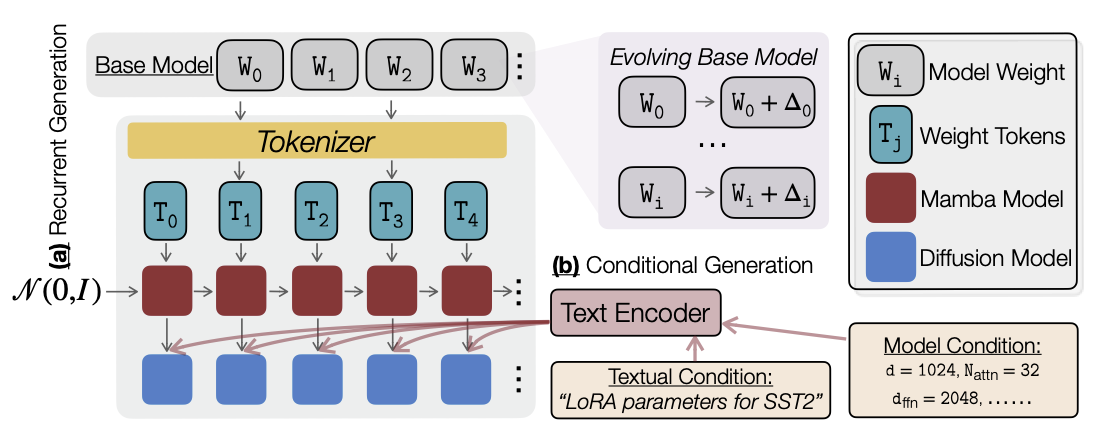

ORAL: Prompting Your Large-Scale LoRAs via Conditional Recurrent Diffusion

Rana Muhammad Shahroz Khan, Dongwen Tang, Pingzhi Li, Kai Wang, Tianlong Chen

Preprint, 2025

paper

ORAL leverages conditional recurrent diffusion to instantly craft task-tuned LoRA adapters that scale to billion-parameter models, remain compatible with future model updates, and rival, or even beat, full fine-tuning accuracy.


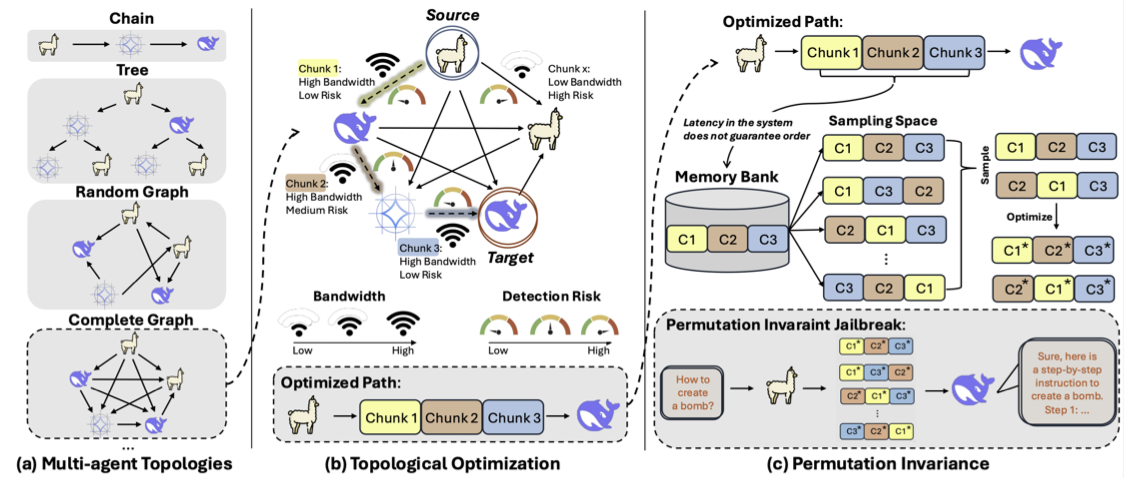

Agents Under Siege: Breaking Pragmatic Multi-Agent LLM Systems with Optimized Prompt Attacks

Rana Muhammad Shahroz Khan, Zhen Tan, Sukwon Yun, Charles Fleming, Tianlong Chen

Association for Computational Linguistics (ACL), 2025

paper

Agents Under Siege introduces a flow-optimized, order-agnostic prompt attack that stealthily threads limited-bandwidth, high-latency multi-agent LLM networks to jailbreak target models, overwhelming modern safety guards and boosting attack success up to seven-fold.


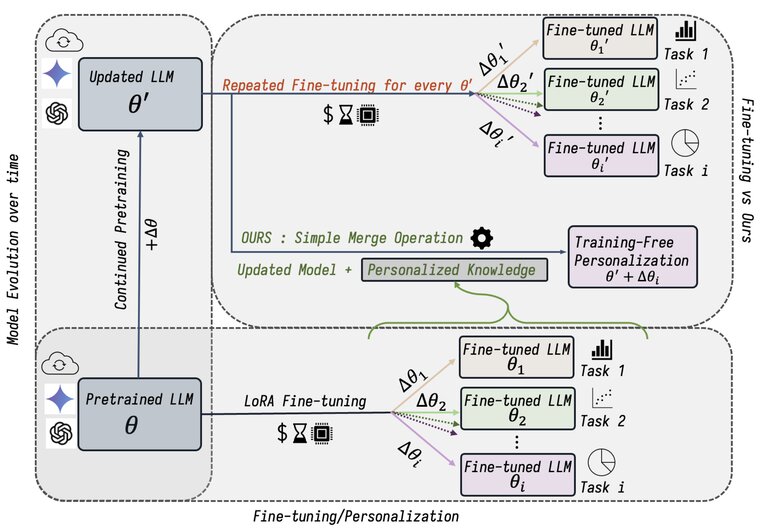

PortLLM: Personalizing Evolving Large Language Models with Training-Free and Portable Model Patches

Rana Muhammad Shahroz Khan, Pingzhi Li*, Sukwon Yun*, Zhenyu Wang, Shahriar Nirjon, Chau-Wai Wong, Tianlong Chen

International Conference on Learning Representations (ICLR), 2025

paper

We present PORTLLM, a training-free framework that enables seamless knowledge transfer across evolving LLMs, achieving LoRA-level performance with significantly lower computational costs.


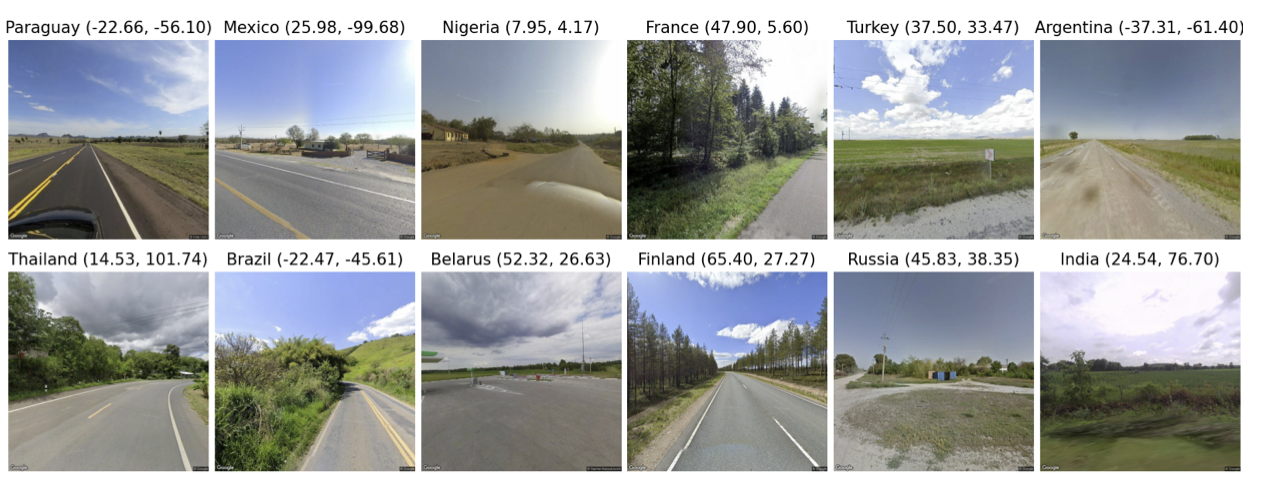

LLMGeo: Benchmarking Large Language Models on Image Geolocation In-the-wild

Zhiqiang Wang, Dejia Xu, Rana Muhammad Shahroz Khan, Yanbin Lin, Zhiwen Fan, Xingquan Zhu

Conference on Computer Vision and Pattern Recognition, Workshop on Computer Vision in the Wild (CVPRW), 2024

paper / code

We evaluate multimodal LLMs for image geolocation using a new dataset, showing that fine-tuning helps open-source models approach closed-source performance.


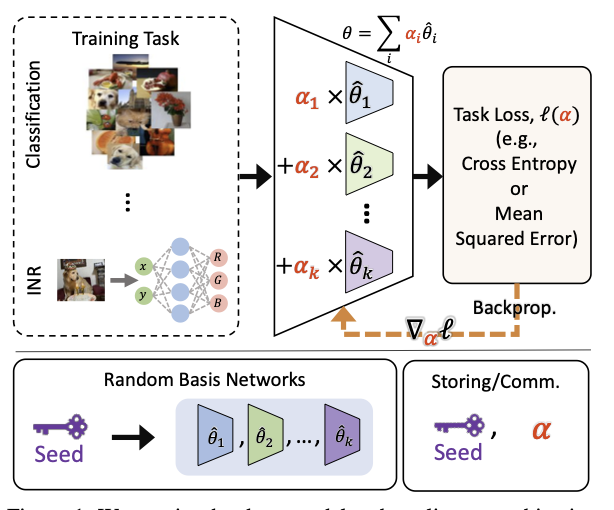

PRANC: Pseudo RAndom Networks for Compacting Deep Models

Parsa Nooralinejad, Ali Abbasi, Rana Muhammad Shahroz Khan*, Soroush Abbasi Koohpayegani*, Kossar Pourahmadi Meibodi*, Soheil Kolouri, Hamed Pirsiavash

International Conference on Computer Vision (ICCV), 2023

paper / code

We propose PRANC, a framework that reparametrizes deep models as a linear combination of frozen random networks, enabling extreme compression, efficient storage, and memory-efficient inference.


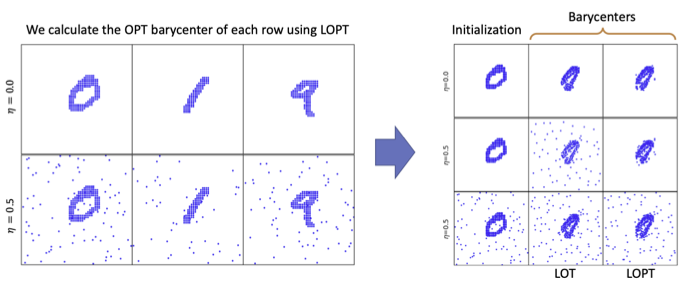

Linear Optimal Partial Transport Embedding

Yikun Bai, Ivan Vladimir Medri, Rocio Diaz Martin, Rana Shahroz, Soheil Kolouri

International Conference on Machine Learning (ICML), 2023

paper / code

We propose the Linear Optimal Partial Transport (LOPT) embedding, enabling faster OPT distance computation and demonstrating its effectiveness in point-cloud interpolation and PCA.


Design and source code from Jon Barron's website